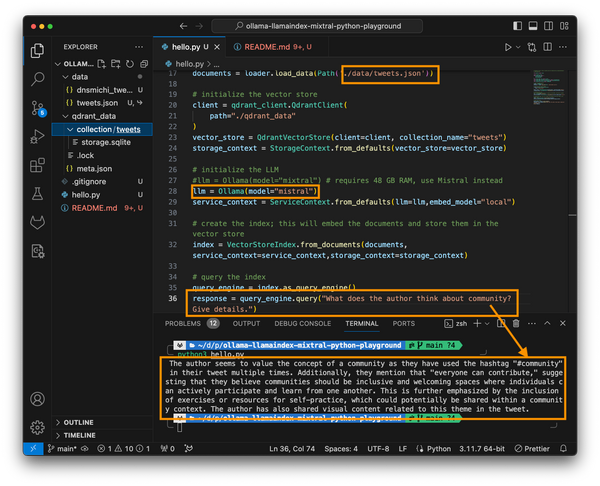

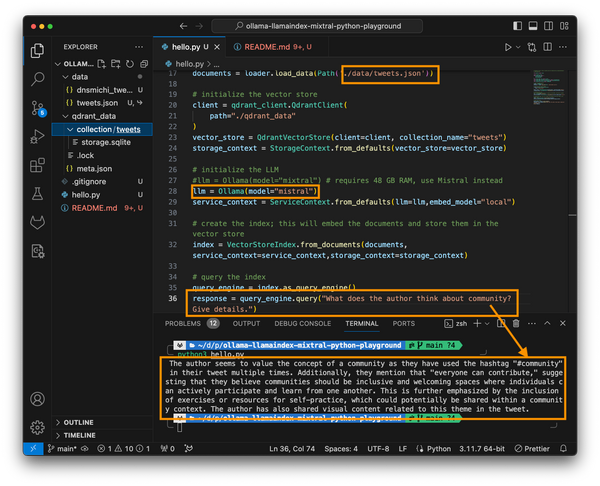

Ollama lets you run large language models locally. I was excited to learn that Mixtral, an open model, is now available through LlamaIndex with Ollama, as explained in this blog tutorial. Its example of loading my own tweets and asking questions about them in that unique context is fascinating.

After running Ollama, and using